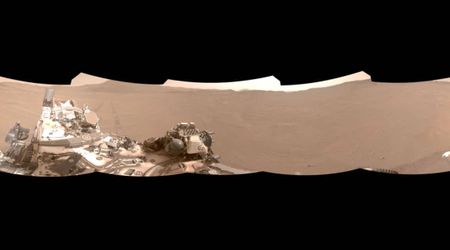

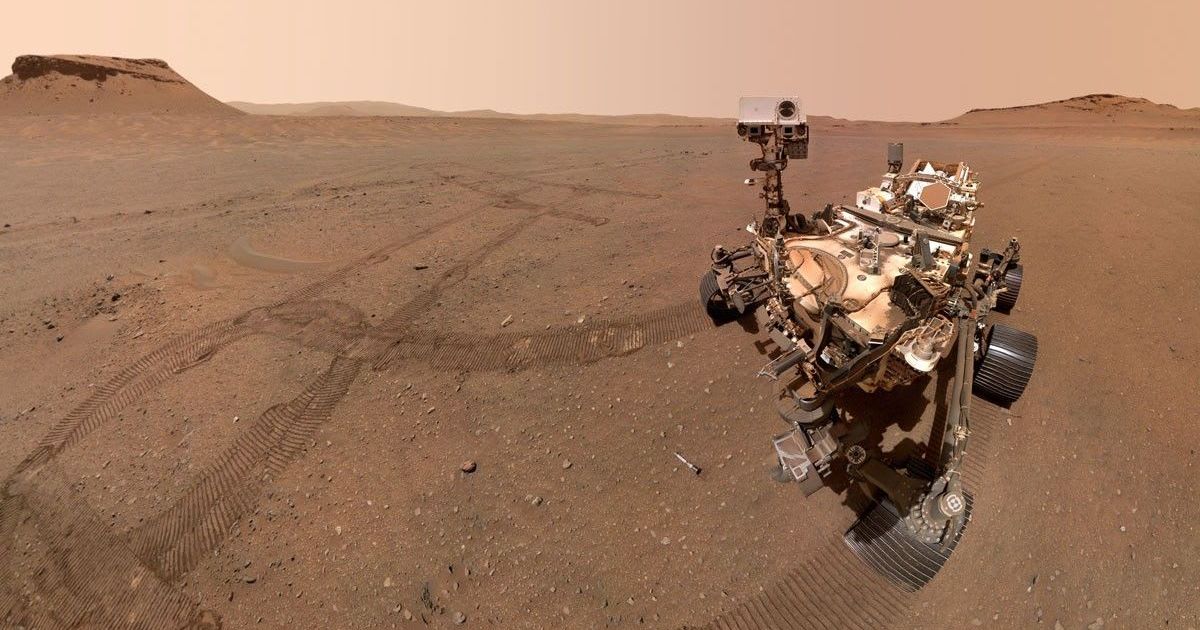

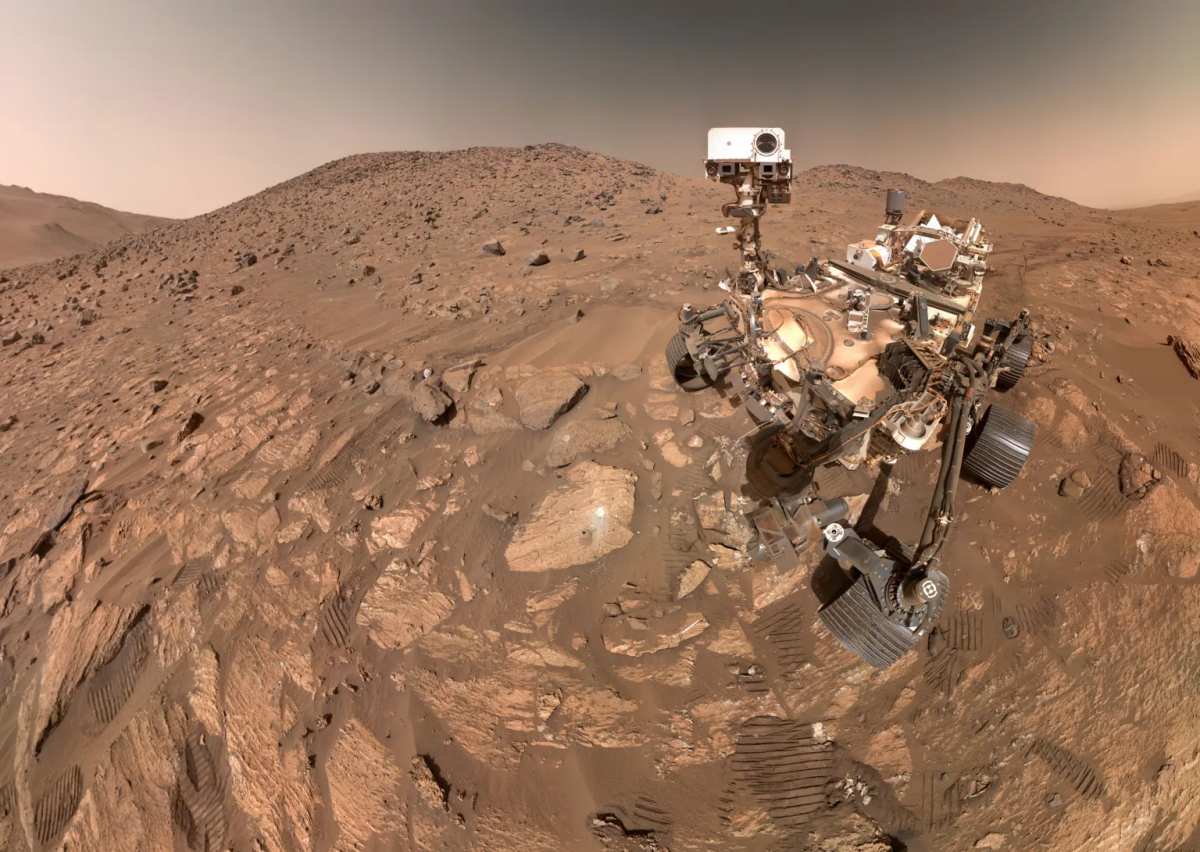

NASA's Perseverance rover successfully completes first AI-directed drive on Mars

NASA’s Jet Propulsion Laboratory has shown that artificial intelligence can be used to guide vehicles 140 million miles away. On December 8 and 10, 2025, the Perseverance Mars rover completed the first drives on another world that were planned by AI rather than by human rover planners.

“This demonstration shows how far our capabilities have advanced and broadens how we will explore other worlds,” said NASA Administrator Jared Isaacman in a statement. “Autonomous technologies like this can help missions to operate more efficiently, respond to challenging terrain, and increase science return as distance from Earth grows. It’s a strong example of teams applying new technology carefully and responsibly in real operations.”

In the demonstration, vision-language models, a kind of generative AI, analyzed data from JPL's surface mission dataset, using the same imagery that human planners rely on, and worked out waypoints: fixed locations along the route where the rover takes up a new set of instructions. This kind of advance planning is necessary because the vast distance between the Red Planet and Earth creates a communication lag that makes real-time remote driving of a rover impossible. For the past 28 years of Mars rover operations, human planners have analyzed terrain and rover status data and laid out routes by hand, with waypoints typically spaced no more than 100 meters apart to avoid hazards. The finished routes are then sent to the rover via NASA’s Deep Space Network for it to follow.

On Perseverance’s 1,707th and 1,709th Martian days, or sols, generative AI took over this planning. It analyzed high-resolution images captured by the HiRISE camera aboard NASA’s Mars Reconnaissance Orbiter, which revealed details of the Martian terrain such as bedrock, outcrops, boulder fields, and sand ripples, and from them generated a continuous path with waypoints. Engineers then ran the AI’s instructions on a virtual replica of the rover at JPL to confirm they were fully compatible with the rover’s flight software. Finally, they sent the commands to Perseverance on Mars, and the rover followed the AI-planned routes for 210 meters on December 8 and 246 meters on December 10, 2025.

“The fundamental elements of generative AI are showing a lot of promise in streamlining the pillars of autonomous navigation for off-planet driving: perception (seeing the rocks and ripples), localization (knowing where we are), and planning and control (deciding and executing the safest path),” said Vandi Verma, a space roboticist at JPL and a member of the Perseverance engineering team, in NASA’s statement. “We are moving towards a day where generative AI and other smart tools will help our surface rovers handle kilometer-scale drives while minimizing operator workload, and flag interesting surface features for our science team by scouring huge volumes of rover images.”

In the NASA statement, Matt Wallace, manager of JPL’s Exploration Systems Office, said, “Imagine intelligent systems not only on the ground at Earth, but also in edge applications in our rovers, helicopters, drones, and other surface elements trained with the collective wisdom of our NASA engineers, scientists, and astronauts.” According to Wallace, this is the kind of game-changing technology needed to build the infrastructure and systems for a sustained human presence on the Moon and to take Americans to Mars and beyond.
